Rüschlikon (CH), Starnberg, April 18/20, 2018 - Toward a non-von Neumann architecture in which memory and data processing coexist in some form...

Background: On April 18, 2018, scientists at the IBM research center near Zurich published a paper in Nature Electronics (1) presenting a new hybrid computing concept. The approach, demonstrated by IBM Research for the first time, combines the classical von Neumann computer architecture with a so-called computational memory unit. Because the news is current, some key details follow in IBM Research's short summary, reproduced in the original English.

IBM Scientists Demonstrate Mixed-Precision In-Memory Computing for the First Time

One of the biggest challenges in exploiting the huge volumes of data now being generated is the fundamental design of current computers. They are based on the von Neumann architecture, which requires data to be shuttled back and forth between memory and processor at high speed, an inefficient process. The answer, according to IBM, is a transition to a non-von Neumann architecture in which memory and processing coexist in some form, a radical departure inspired by the way the human brain works.

One promising approach being explored by IBM researchers in Zurich, Switzerland, is in-memory computing, in which nanoscale resistive memory devices, organized in a computational memory unit, are used for both processing and memory.

However, reaching the numerical accuracy typically required for data analytics and scientific computing raises new challenges, because of device variability and other non-ideal device characteristics.

In a paper appearing today in the peer-reviewed journal Nature Electronics, IBM scientists introduce a novel hybrid concept called mixed-precision in-memory computing, which combines a von Neumann machine with a computational memory unit. The team demonstrates the efficacy of this approach by accurately solving systems of linear equations; specifically, they solved a system of 5,000 equations using 998,752 phase-change memory (PCM) devices.

In this hybrid design, the computational memory unit performs the bulk of the computational tasks, whereas the von Neumann machine implements a method to iteratively improve or refine the accuracy of the solution. The system therefore benefits from both the high precision of digital computing and the energy/areal efficiency of in-memory computing.
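The division of labor described above can be illustrated with iterative refinement. The following Python sketch shows the idea under stated assumptions and is not IBM's implementation: the "computational memory" is modeled as a copy of the matrix with a hypothetical, fixed multiplicative Gaussian programming error (`sigma`); the imprecise inner solver is a few Jacobi sweeps that use only that noisy copy (the inner solver used in the paper may differ); and the outer loop computes residuals and updates in ordinary float64 arithmetic. All function names are illustrative.

```python
import math
import random


def make_test_system(n, seed=0):
    """Symmetric, strictly diagonally dominant test matrix A and right-hand side b."""
    rng = random.Random(seed)
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        a[i][i] = float(n)
        for j in range(i + 1, n):
            a[i][j] = a[j][i] = rng.uniform(-0.5, 0.5)
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return a, b


def program_memory(a, sigma, seed=1):
    """Hypothetical device model: every stored entry receives a fixed
    multiplicative Gaussian programming error of relative size sigma."""
    rng = random.Random(seed)
    return [[x * (1.0 + rng.gauss(0.0, sigma)) for x in row] for row in a]


def matvec(m, v):
    """Plain matrix-vector product."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def inexact_solve(m_noisy, r, sweeps=10):
    """Low-precision inner solver: a few Jacobi sweeps on m_noisy z = r.
    Every matrix-vector product uses only the noisy 'in-memory' matrix."""
    n = len(r)
    z = [0.0] * n
    for _ in range(sweeps):
        mz = matvec(m_noisy, z)
        # Jacobi update: z_i <- z_i + (r_i - (M z)_i) / M_ii
        z = [z[i] + (r[i] - mz[i]) / m_noisy[i][i] for i in range(n)]
    return z


def mixed_precision_solve(a, b, m_noisy, tol=1e-10, max_outer=50):
    """Outer iterative refinement in float64: compute the exact residual,
    let the imprecise unit solve for a correction, and accumulate it."""
    x = [0.0] * len(b)
    for k in range(max_outer):
        r = [bi - axi for bi, axi in zip(b, matvec(a, x))]  # high precision
        if math.sqrt(sum(ri * ri for ri in r)) < tol:
            return x, k  # converged after k corrections
        z = inexact_solve(m_noisy, r)  # approximate correction
        x = [xi + zi for xi, zi in zip(x, z)]
    return x, max_outer


if __name__ == "__main__":
    a, b = make_test_system(20)
    m_noisy = program_memory(a, sigma=0.05)
    x, corrections = mixed_precision_solve(a, b, m_noisy)
    refined = max(abs(bi - axi) for bi, axi in zip(b, matvec(a, x)))
    z = inexact_solve(m_noisy, b)
    noisy_only = max(abs(bi - azi) for bi, azi in zip(b, matvec(a, z)))
    print(f"{corrections} corrections, max residual {refined:.1e}")
    print(f"inner solver alone: max residual {noisy_only:.1e}")
```

Because the inner solver only has to shrink the residual by some factor in each outer step, the final accuracy in this model is limited by the float64 residual computation rather than by the modeled device error. A larger `sigma` costs more outer iterations and, past some point, stops the loop from converging.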

Memristive Devices

The technology is based on memristive devices, which store data in their conductance states and remember the history of the current that has flowed through them. The challenge is that such devices suffer from significant inter-device variability and inhomogeneity across an array of cells, as well as from randomness intrinsic to the way they operate, which makes them impractical for many applications.

According to the IBM scientists, the solution is to combine the old with the new, so that each system compensates for the shortcomings of the other.

Comment from IBM Research: “The concept is motivated by the observation that many computational tasks can be formulated as a sequence of two distinct parts,” said IBM researcher Manuel Le Gallo, lead author of the paper. “We start with the high computational load using the memristive device for the approximate answer and then the lighter compute is done with the von Neumann device, which takes the resulting error and calculates it accurately. It’s the perfect marriage.”

“In this way the system can benefit from an overall high areal and energy efficiency, because the bulk of the computation is still realized in a non-von Neumann manner, and yet can still achieve an arbitrarily high computational accuracy,” said IBM scientist Abu Sebastian, co-author and one of the initiators of this work.

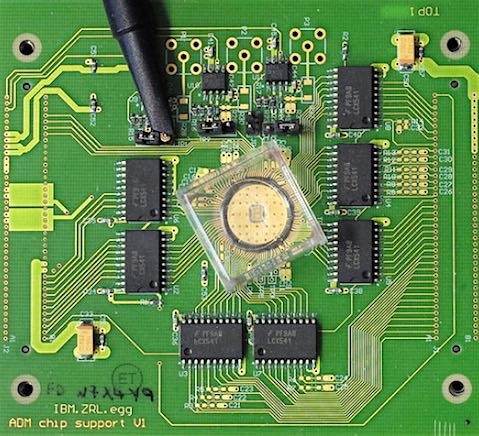

Fig. 1: PCM device (image source: IBM Research)

Phase Change Memory

This work builds on more than a decade of research and development at IBM on phase-change memory (PCM) devices. PCM devices are resistive memory devices that can be programmed to a desired conductance value by altering the configuration of the amorphous phase (formed by melting and rapid cooling) and the crystalline phase within the device. For the paper, the team used a prototype chip with about one million PCM devices, arranged in an array of 512 word lines × 2,048 bit lines and integrated in 90 nm CMOS technology.
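Programmed conductances are what let such an array compute. In an idealized crossbar, voltages applied to the word lines make each device pass a current proportional to its conductance (Ohm's law), and the currents add up along each bit line (Kirchhoff's current law), so a single read yields a matrix-vector product. The Python sketch below assumes ideal, noise-free devices, a non-negative matrix mapped linearly onto a hypothetical conductance range (`G_MAX`), and input values applied directly as voltages; signed matrices and the analog-to-digital conversion of a real chip are not modeled, and the function names are illustrative.

```python
G_MAX = 25e-6  # hypothetical maximum device conductance, in siemens


def program_conductances(matrix, g_max=G_MAX):
    """Map a non-negative matrix linearly onto conductances.
    Row i of the matrix is stored on word line i, column j on bit line j."""
    if any(v < 0 for row in matrix for v in row):
        raise ValueError("this sketch only handles non-negative entries")
    a_max = max(max(row) for row in matrix)
    if a_max == 0:
        raise ValueError("matrix must contain a positive entry")
    return [[g_max * v / a_max for v in row] for row in matrix], a_max


def crossbar_mvm(conductances, voltages):
    """Idealized read-out: each device passes I = G * V (Ohm's law) and the
    currents on bit line j add up (Kirchhoff's current law)."""
    n_bit_lines = len(conductances[0])
    return [sum(conductances[i][j] * v for i, v in enumerate(voltages))
            for j in range(n_bit_lines)]


def decode(currents, a_max, g_max=G_MAX):
    """Undo the conductance scaling to recover the matrix-vector product."""
    return [c * a_max / g_max for c in currents]


if __name__ == "__main__":
    # 2 word lines x 3 bit lines; the prototype array would be 512 x 2048.
    g, a_max = program_conductances([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
    currents = crossbar_mvm(g, [0.1, 0.2])  # input voltages on the word lines
    print(decode(currents, a_max))  # one value per bit line
```

In this layout the prototype array would take 512 input voltages and deliver 2,048 output currents per read; the device variability discussed above would show up as errors in the programmed conductances.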

To test the design, the team used real-world gene expression data (RNA measurements) from cancer patients, publicly available from The Cancer Genome Atlas, focusing on 40 genes reported in the manually curated autophagy pathway of the Kyoto Encyclopedia of Genes and Genomes. Autophagy is particularly interesting to study because it can act both as a tumor suppressor and as a tumor enabler, helping tumors tolerate metabolic stress.

To infer and compare the gene-interaction networks of normal and cancer tissue, the team calculated the partial correlations between the genes from the inverse of the covariance matrix. The covariance matrix describes how the variables vary around their means and with one another; its inverse encodes the partial correlations, that is, the dependence between two genes after the influence of all the other genes has been removed.
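The step from covariance to partial correlations can be written out explicitly. With Θ = Σ^-1 the inverse covariance (precision) matrix, the partial correlation between genes i and j is ρ_ij = -Θ_ij / sqrt(Θ_ii Θ_jj). Computing Σ^-1 amounts to solving one linear system Σ θ_j = e_j per column, which is how the task reduces to linear equations. The Python sketch below uses plain Gauss-Jordan elimination in float64 in place of the PCM-based solver, and a small made-up 3 × 3 covariance matrix rather than the TCGA data; function names are illustrative.

```python
import math


def invert(matrix):
    """Gauss-Jordan elimination with partial pivoting (float64)."""
    n = len(matrix)
    # Augment [A | I]; reducing A to I turns the right half into A^-1.
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(aug[k][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def partial_correlations(cov):
    """rho_ij = -Theta_ij / sqrt(Theta_ii * Theta_jj) with Theta = cov^-1."""
    theta = invert(cov)
    n = len(cov)
    return [[1.0 if i == j else -theta[i][j] / math.sqrt(theta[i][i] * theta[j][j])
             for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    # Made-up covariance: gene 0 and gene 2 are linked only through gene 1.
    cov = [[1.0, 0.5, 0.25],
           [0.5, 1.0, 0.5],
           [0.25, 0.5, 1.0]]
    p = partial_correlations(cov)
    print(p[0][1], p[0][2])  # p[0][2] is ~0 despite cov[0][2] = 0.25
```

The example shows why partial correlations are used for network inference: genes 0 and 2 are correlated in the covariance matrix, but once gene 1 is accounted for their partial correlation vanishes, so no direct interaction would be inferred between them.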

The research team programmed the covariance matrix into the PCM chip and used mixed-precision in-memory computing to solve 40 linear equations, repeating this for both cancer and normal tissue. The resulting gene-interaction networks were identical to those computed entirely in software in double precision.

The team measured the energy consumption of the mixed-precision in-memory computing system, accounting for all data conversions and data transfers between the computational memory and the high-precision digital computing unit. The computational memory unit was simulated with various degrees of device variability, and both an IBM POWER8 CPU and an NVIDIA P100 GPU were tested as high-precision computing units. Maximum energy gains relative to the CPU-based and GPU-based implementations ranged from 6.8x, with device variability comparable to that of the current prototype PCM chip, up to 24x, assuming the reduced device variability that future optimized chips could achieve.

The next steps will be to generalize mixed-precision in-memory computing beyond the application domain of solving systems of linear equations to other computationally intensive tasks arising in automatic control, optimization problems, machine learning, deep learning and signal processing.

“The fact that such a computation can be performed partly with a computational memory without sacrificing the overall computational accuracy opens up exciting new avenues towards energy-efficient and fast large-scale data analytics. This approach overcomes one of the key challenges in today’s von Neumann architectures in which the massive data transfers have become the most energy-hungry part,” said IBM Fellow Evangelos Eleftheriou, a co-author of the paper. “Such solutions are in great demand because analyzing the ever-growing datasets we produce will quickly increase the computational load to the exascale level if standard techniques are to be used.”

Research team / authors (1): Manuel Le Gallo, Abu Sebastian, Roland Mathis, Matteo Manica, Heiner Giefers, Tomas Tuma, Costas Bekas, Alessandro Curioni and Evangelos Eleftheriou.

(1) Source: Mixed-Precision In-Memory Computing. Link to the article in Nature Electronics (note: the article at Nature is behind a paywall)